MPI+OpenMP: he-hy - Hello Hybrid! - compiling, starting

Prepare for these Exercises:

cd ~/HY-LRZ/he-hy # change into your he-hy directory

Contents:

job_*.sh # job-scripts to run the provided tools, 2 x job_*_exercise.sh

*.[c|f90] # various codes (hello world & tests) - NO need to look into these!

coolmuc2/coolmuc2_slurm.out_* # coolmuc2 output files --> sorted (note: physical cores via modulo 28)

IN THE ONLINE COURSE he-hy shall be done in two parts:

first exercise = 1. + 2. + 3. + 4.

second exercise = 5. + 6. + 7. (after the talk on pinning)

1. FIRST THINGS FIRST - PART 1: find out about a (new) cluster - login node

module (avail, load, list, unload); compiler (name & --version)

Some suggestions for what you might want to try:

module list # are there any default modules loaded ? are these okay ?

# ==> default modules on CoolMUC2 seem to be okay

module avail # which modules are available ?

# default versions ? latest versions ? spack ?

# ==> look for: compiler, mpi, likwid

module load likwid # let's try to load a module...

likwid-topology -c -g # ... and use it on the login node

module unload likwid # clean up ...

# decide on the modules to be used - we'll use the default on CoolMUC2 (Intel compiler and Intel MPI library)

module list # list modules loaded

echo $CC # check compiler names

echo $CXX #

echo $F90 #

icc --version # check versions for standard Intel compilers

icpc --version #

ifort --version #

mpi<tab><tab> # figure out which MPI wrappers are available

mpicc --version # try standard names for the MPI wrappers

mpicxx --version #

mpif90 --version # ==> okay on CoolMUC2 (but might give unexpected compilers on other clusters...)

mpiicc --version # try special names, e.g., for Intel...

mpiicpc --version #

mpiifort --version # ==> okay on CoolMUC2 (these should always work with Intel; note the double i)
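The manual checks above can be collected into one small loop. This is only a sketch: the function name `check_tools` is hypothetical, and the list of names is simply the set of compilers and wrappers tried above. It prints the first line of `--version` for every name that is found and `not found` otherwise.

```shell
#!/bin/bash
# Report which compiler and MPI wrapper names exist on this machine.
# check_tools is a hypothetical helper, not part of the course material.
check_tools() {
    local tool
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            # Print only the first line of the --version output.
            printf '%-10s %s\n' "$tool" "$("$tool" --version 2>&1 | head -n 1)"
        else
            printf '%-10s %s\n' "$tool" "not found"
        fi
    done
}

# Same names as tried by hand above.
check_tools icc icpc ifort mpicc mpicxx mpif90 mpiicc mpiicpc mpiifort
```

Running it once on the login node and once inside a batch job makes differences between the two environments easy to spot.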

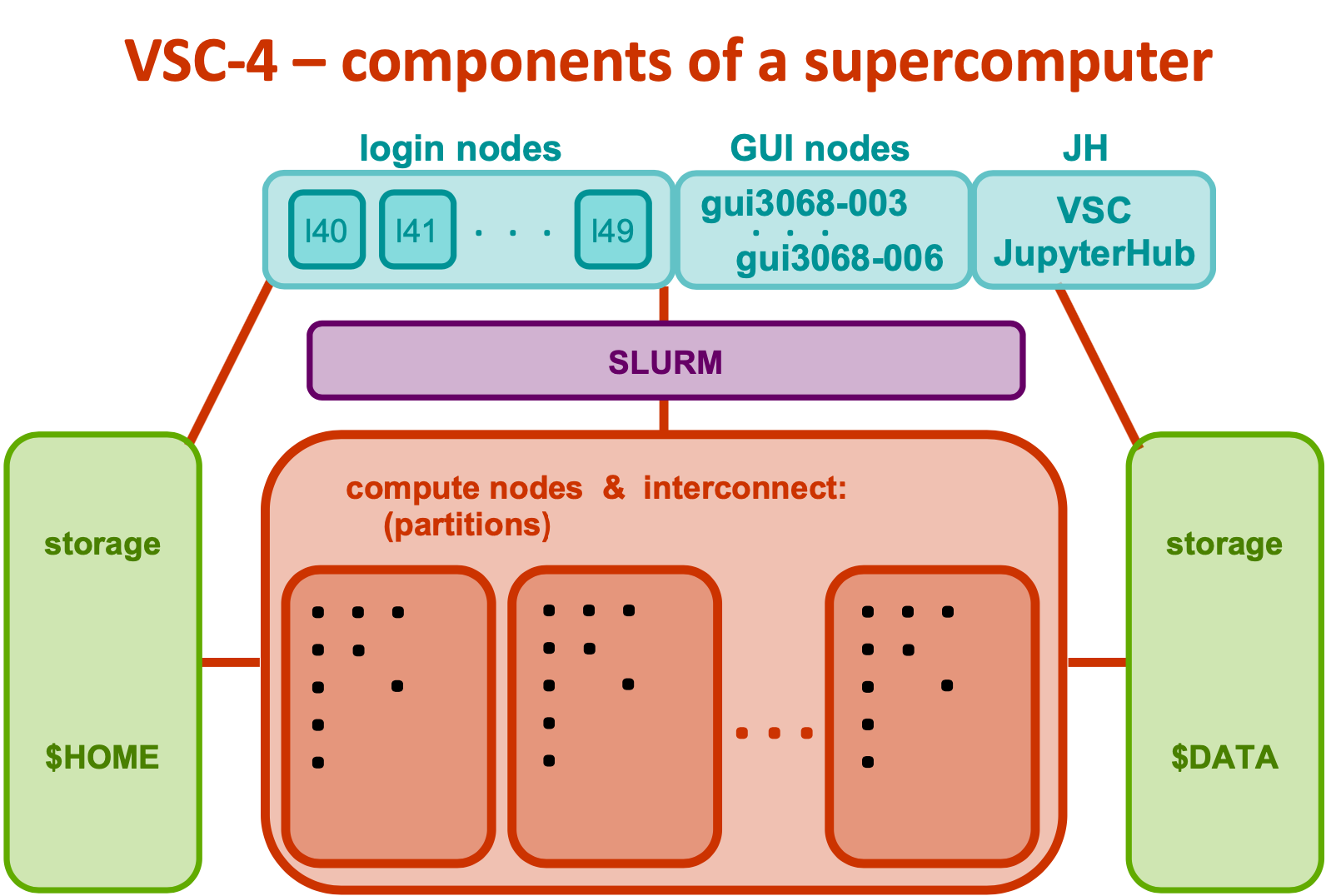

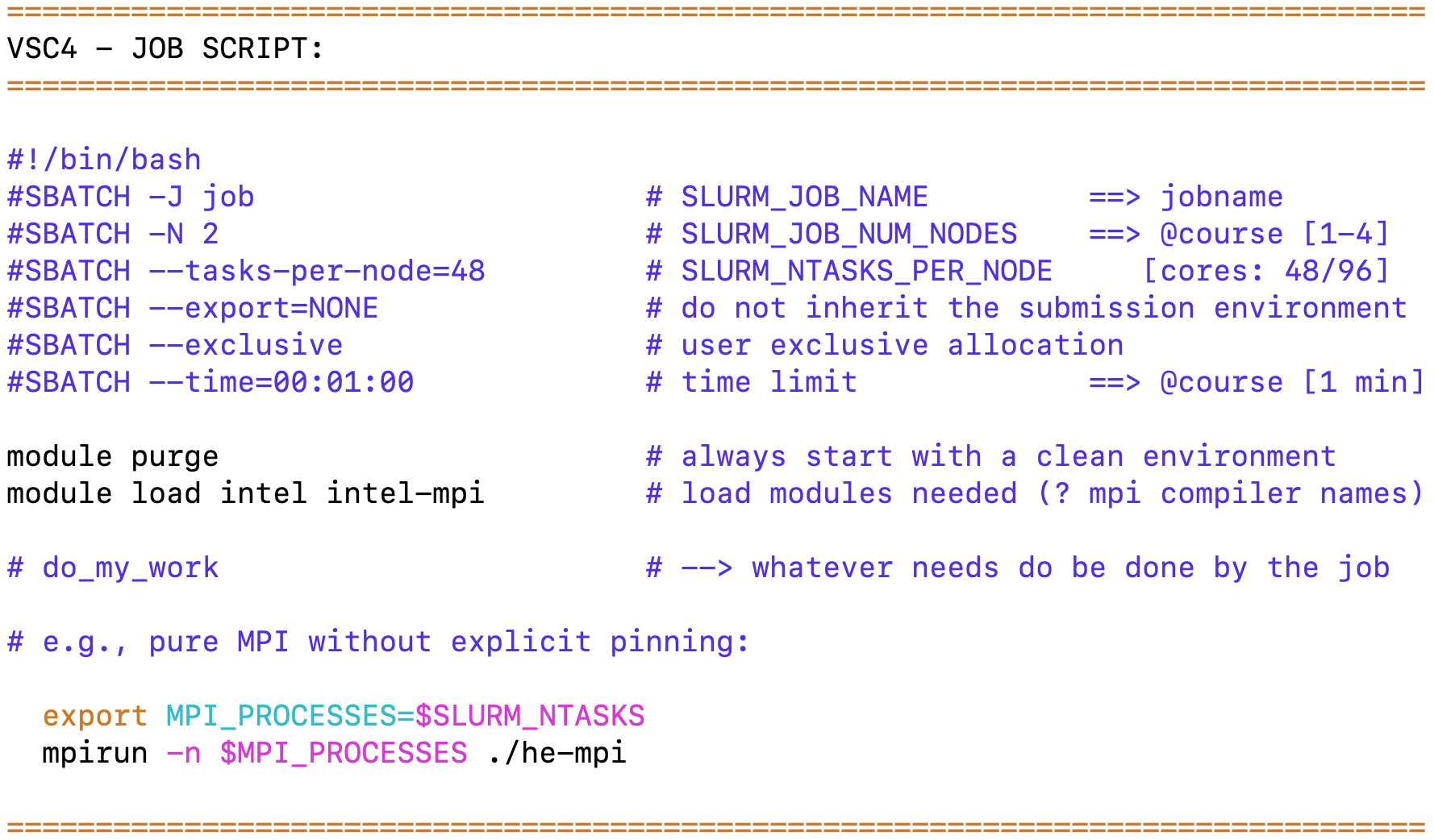

2. FIRST THINGS FIRST - PART 2: find out about a (new) cluster - batch jobs

job environment, job scripts (clean) & batch system (SLURM); test compiler and MPI version

job_env.sh, job_te-ve_[c|f].sh, te-ve*

! there might be several hardware partitions and qos on a cluster & a default !

! you have to check all hardware partitions you would like to use separately !

! in the course we have a node reservation (it's switched on in the job scripts) !

SLURM (CoolMUC2):

sbatch job*.sh # submit a job

squeue -M cm2 # check own jobs

scancel -M cm2 jobid # cancel a job

output will be written to: slurm-*.out # output

sinfo -M cm2 --reservation # show reservation

sbatch job_env.sh # check job environment

mpiicc -o te-ve te-ve.c # C: compile on the login node

mpiifort -o te-ve_f08 te-ve-mpi_f08.f90 # Fortran: compile on the login node

mpiifort -o te-ve_old te-ve-mpi_old.f90

mpiifort -o te-ve_key te-ve-mpi_old-key.f90 # if this compilation fails, keyword-based argument lists are not supported

sbatch job_te-ve_c.sh # C: submit job --> test version (te-ve)

sbatch job_te-ve_f.sh # Fortran: submit job --> test version (te-ve)
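To give an idea of what a job script for checking the job environment might contain, here is a minimal sketch. It is not the provided job_env.sh: the job name, node count and time limit are assumptions, and the partition, qos and reservation settings that the course scripts use are deliberately left out. The function name `print_job_env` is hypothetical.

```shell
#!/bin/bash
#SBATCH --job-name=job_env_sketch   # hypothetical job name
#SBATCH --clusters=cm2              # same cluster as in "squeue -M cm2"
#SBATCH --nodes=1                   # assumption: one node is enough for a check
#SBATCH --time=00:05:00             # assumption: short time limit
#SBATCH --output=slurm-%j.out       # %j is replaced by the job ID

# Print what a job sees at run time. Outside of SLURM the SLURM_* variables
# are simply missing, so the script also runs on a login node.
print_job_env() {
    echo "host: $(uname -n)"
    echo "OMP_NUM_THREADS: ${OMP_NUM_THREADS:-unset}"
    echo "SLURM variables:"
    env | grep '^SLURM_' | sort
    # "module" is usually a shell function, so test for it before calling it.
    if command -v module >/dev/null 2>&1; then
        module list 2>&1 || true
    fi
    return 0
}

print_job_env
```

Comparing this output with the same commands typed on the login node shows whether modules and variables are inherited by the job.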

3. MPI+OpenMP: :TODO: how to compile and start an application

how to do conditional compilation

job_co-co_[c|f].sh, co-co.[c|f90]

Recap with Intel compiler & Intel MPI (→ see also slide 29):

| | compiler: | | ? USE_MPI | ? _OPENMP | | START APPLICATION: |
|---|---|---|---|---|---|---|
| C: | | | | | | export OMP_NUM_THREADS=# |
| with MPI | mpiicc | | -DUSE_MPI | -qopenmp | … | mpirun -n # ./<exe> |
| no MPI | icc | | | -qopenmp | … | ./<exe> |
| Fortran: | | | | | | export OMP_NUM_THREADS=# |
| with MPI | mpiifort | -fpp | -DUSE_MPI | -qopenmp | … | mpirun -n # ./<exe> |
| no MPI | ifort | -fpp | | -qopenmp | … | ./<exe> |

TODO:

→ Compile and Run (4 possibilities) co-co.[c|f90] = Demo for conditional compilation.

→ Do it by hand - compile on the login node & submit as job

→ It's available as a script (needs editing): job_co-co_[c|f].sh

→ Have a look into the code: co-co.[c|f90] to see how it works.

Always check compiler names and versions, e.g. with: mpiicc --version !!!
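The recap table can be turned into a short script for the C version. This is a sketch under several assumptions: the four possibilities are taken to be the on/off combinations of -DUSE_MPI and -qopenmp, the executable names, the process count and the default thread count are hypothetical, and by default the script only prints the commands (dry run) so that it can be tried anywhere.

```shell
#!/bin/bash
# Dry run by default: commands are printed, not executed.
# Set DRY_RUN=0 where the Intel compiler and Intel MPI are available.
DRY_RUN=${DRY_RUN:-1}
SRC=co-co.c                                    # source file from the exercise
NP=2                                           # assumption: 2 MPI processes
export OMP_NUM_THREADS=${OMP_NUM_THREADS:-4}   # assumption: 4 threads

# Print a command and execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

co_co_variants() {
    # 1. MPI and OpenMP
    run mpiicc -DUSE_MPI -qopenmp -o co-co_mpi_omp "$SRC"
    run mpirun -n "$NP" ./co-co_mpi_omp
    # 2. MPI only
    run mpiicc -DUSE_MPI -o co-co_mpi "$SRC"
    run mpirun -n "$NP" ./co-co_mpi
    # 3. OpenMP only
    run icc -qopenmp -o co-co_omp "$SRC"
    run ./co-co_omp
    # 4. neither MPI nor OpenMP
    run icc -o co-co_serial "$SRC"
    run ./co-co_serial
}

co_co_variants
```

For the Fortran version, the table suggests replacing mpiicc and icc with mpiifort and ifort, adding -fpp, and using co-co.f90 as the source.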

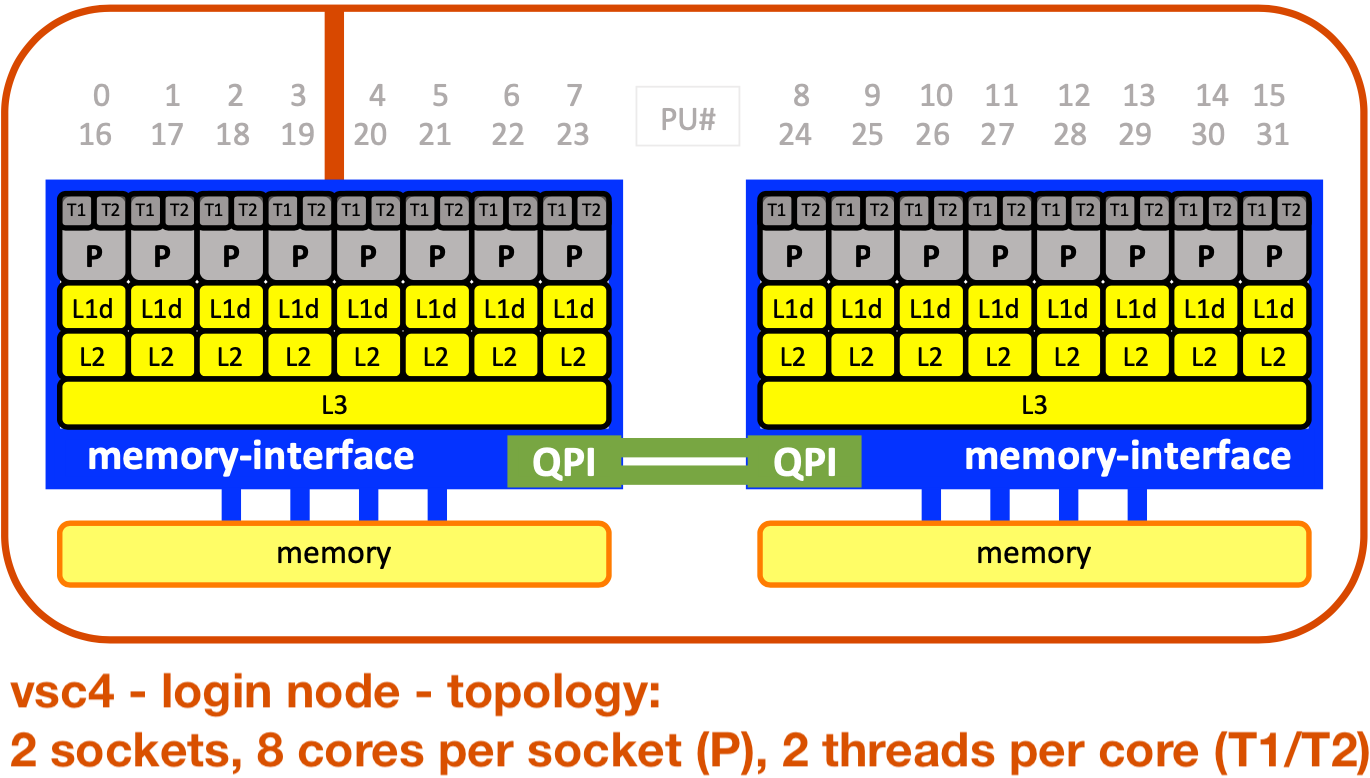

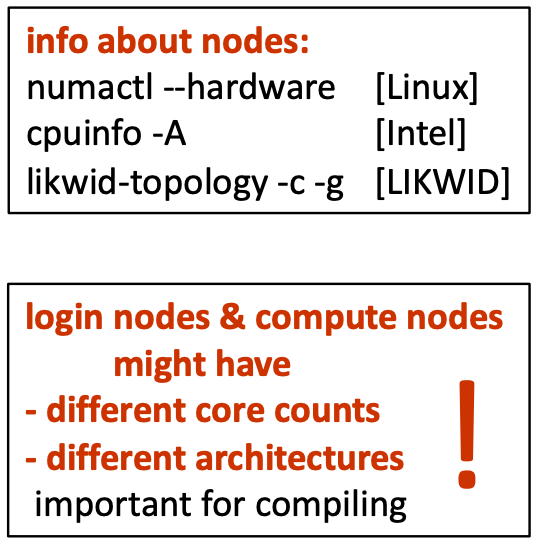

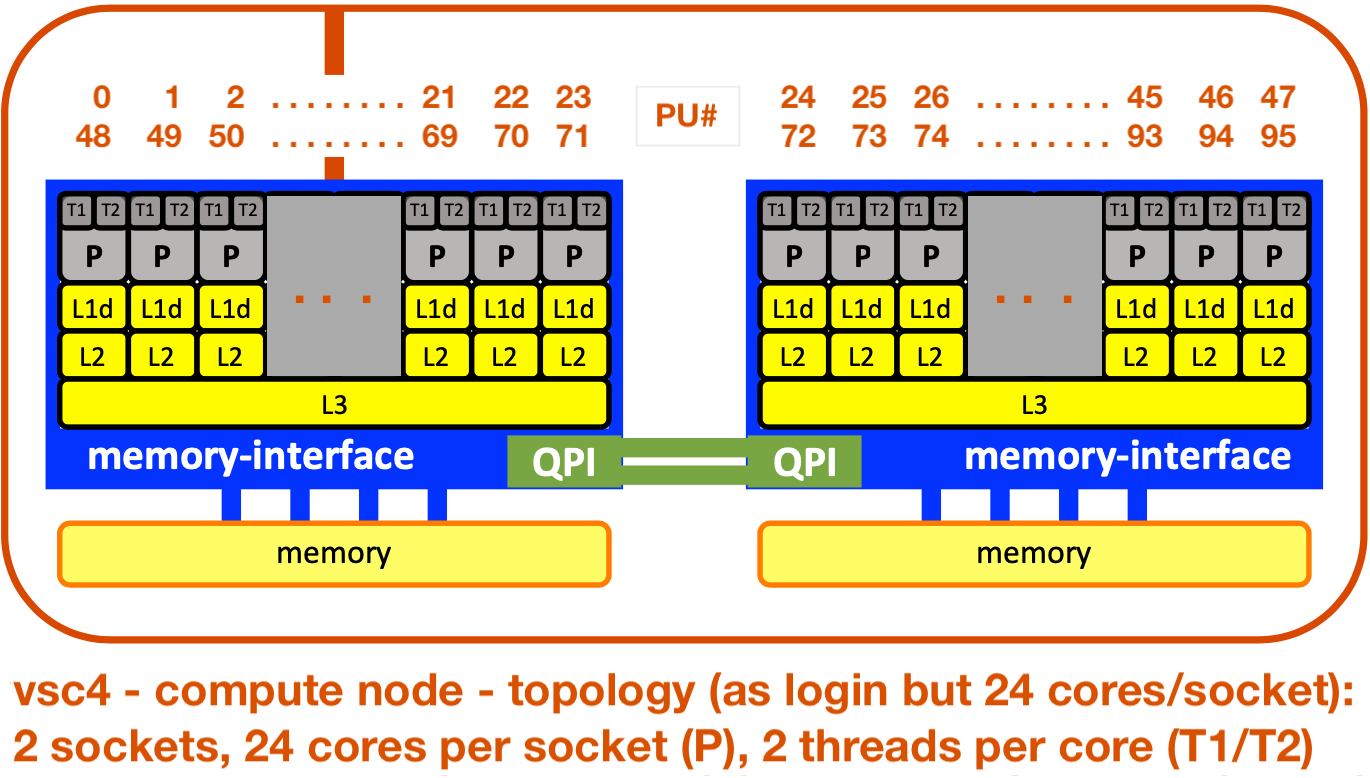

4. MPI+OpenMP: :TODO: get to know the hardware - needed for pinning

(→ see also slide 30)

TODO:

→ Find out about the hardware of compute nodes:

→ Write and Submit: job_check-hw_exercise.sh

→ Describe the compute nodes... (core numbering?)

→ solution = job_check-hw_solution.sh

→ solution.out = coolmuc2/coolmuc2_slurm.out_check-hw_solution
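When reading the sorted CoolMUC2 output files, the contents list at the top notes that physical cores are obtained via modulo 28. A small helper shows the arithmetic. It is a sketch only: the function name `phys_core` is hypothetical, the sample CPU IDs are arbitrary, and it assumes that the numbers printed in the output are logical CPU IDs to which the modulo applies directly.

```shell
#!/bin/bash
# Map a logical CPU ID to a physical core number via modulo 28,
# as stated in the note on the CoolMUC2 output files.
CORES_PER_NODE=28

phys_core() {
    echo $(( $1 % CORES_PER_NODE ))
}

# Arbitrary sample IDs for illustration.
for cpu in 0 27 28 30; do
    echo "logical CPU $cpu -> physical core $(phys_core "$cpu")"
done
```

Two logical CPU IDs that map to the same physical core number would then share that core.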